Comcast 2gig/200M Service Upgrade Issues

I recently upgraded my home Comcast internet service from 1gig/40meg to their newest 2gig/200meg service. This is a significant upgrade for Comcast because it requires upgrading the field-installed upstream amps to allow more upstream bandwidth. I was surprised to see that the hardware work had already been completed in my area, but happy to give it a try.

One oddity of the service is that Comcast requires you to run their cable modem if you want the 2gig/200meg service combined with unlimited traffic. They specifically mention it during sign-up, and they note that if you switch to your own modem the service will downgrade back to the 1gig service with a 1.2TB data cap.

A day after ordering the service a new XB8 modem arrived. I am running a Ubiquiti UDM Pro firewall, which has 10G SFP+ interfaces for both LAN and WAN. In my case the LAN side is connected to a Ubiquiti 10G core switch that has fiber drops to the other wiring closets in my house, as well as 10G connections to my office machines and 40G connections to the server racks.

The XB8 modem has a single copper 2.5G-capable port (marked with the red bar), and most new 10GBASE-T SFP+ modules will down-convert to 2.5G wire speeds. I connected the 2.5G port on the cable modem to my UDMP via an SFP+ module, and the Comcast side indicated a 2500 Mbit connection. Interestingly, on the UDMP software side the interface appears as a 10G interface because the SFI link from the device to the SFP+ module is still running at 10G. I’d be curious to dig into that a bit more to see what the module does for speed conversion and throttling. With the connection up and running, and with the cable modem in the default NON-BRIDGE mode, my firewall got a 10.0.0.4 address, as I would expect. Some quick speed tests from my office machine gave me acceptable >2 gig download speeds and 100-200 Mbit upload speeds. Upload speeds were more volatile, in part because the end nodes for the speed test were saturated, but the results were still acceptable overall.

Given my use case I then switched the XB8 over to BRIDGE mode. It is a simple toggle in the Comcast interface (address 10.0.0.1), after which the modem appears to restart. After the restart the UDMP got an external Comcast address (24.x.x.x) over DHCP, and internet connectivity seemed great.

A few hours later I noticed the UDMP was saying the ‘internet’ connection was not working, and sure enough a ping of 1.1.1.1 dropped 90% of the packets. I could still open some web pages thanks to the amazing resilience of TCP, but there was clearly something causing lots of packet drops.

My first thought was that the 2.5G interface might be having problems. The 10GBASE-T SFP+ module I was using was quite old, so perhaps its 2.5G support was not perfect. As soon as I unplugged the connection from the SFP+ and put it into the UDM Pro’s 1G-only copper port, internet connectivity was restored and looked perfect. OK, time to order a new 10GBASE-T SFP+.

Five minutes later, the same internet drop happened again. I unplugged the drop and plugged it back in, and that instantly fixed it. Hmm. That is odd… Is it exactly 5 mins? Stopwatch out. Yep, exactly 5 mins after re-plugging, the problem happens. Resetting the interface fixes it for 5 more mins, so my first thought was that it was something with the UDMP interface. I rerouted the UDMP interface through the core switch on a dedicated VLAN so that the newer core switch would be the connection point for the Comcast connection, but it made no difference. If I soft-downed the interface and brought it back up on the Comcast side, it didn’t fix anything, but if I did it on the UDMP side it did. Interesting!
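For anyone who wants to time a dropout like this without a stopwatch, a timestamped packet-loss log does the job. The following is only a rough sketch: the function names `loss_percent` and `watch_loss` are made up for illustration, and it assumes a `ping` whose summary line contains the usual "N% packet loss" text.

```shell
# Extract the packet-loss percentage from ping's summary line on stdin.
loss_percent() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# Print one timestamped loss sample per batch of probes, forever.
# $1 = target (defaults to 1.1.1.1), $2 = probes per sample (defaults to 10)
watch_loss() {
    target=${1:-1.1.1.1}
    count=${2:-10}
    while :; do
        loss=$(ping -c "$count" "$target" 2>/dev/null | loss_percent)
        printf '%s loss=%s%%\n' "$(date '+%H:%M:%S')" "${loss:-unknown}"
    done
}
```

Starting `watch_loss` right after re-plugging the link and noting when the loss figure jumps gives the interval directly.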

I did notice the problem didn’t start until I switched to BRIDGED mode, so I tried switching back out of BRIDGED mode. That fixed it. I can get back to 2.5g and everything is working great. No 5 min dropout. Could BRIDGE mode be the problem?

At this point, the network engineer in me said ‘get a pcap going’. Since I was running through the core switch, I configured another SFP+ port as a mirror port and connected that port to an interface on a laptop running Wireshark.
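The capture here was taken with Wireshark, but the same thing can be done from a command line on whatever machine sits on the mirror port. This is a minimal sketch assuming `tcpdump` is installed there; the function names and the interface and file names in the usage line are hypothetical.

```shell
# Capture filter for the three protocols examined in this post:
# ARP, ICMP, and DHCP (UDP ports 67 and 68).
wan_capture_filter() {
    printf '%s\n' 'arp or icmp or (udp and (port 67 or port 68))'
}

# $1 = interface attached to the mirror port, $2 = output file
capture_wan() {
    # -n: skip name resolution; -w: write raw packets for later analysis
    tcpdump -n -i "$1" -w "$2" "$(wan_capture_filter)"
}
```

Something like `capture_wan eth0 bridge-mode.pcap` (run as root) would then produce a file that opens directly in Wireshark.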

I switched the cable modem back to BRIDGED mode and started capturing from power on.

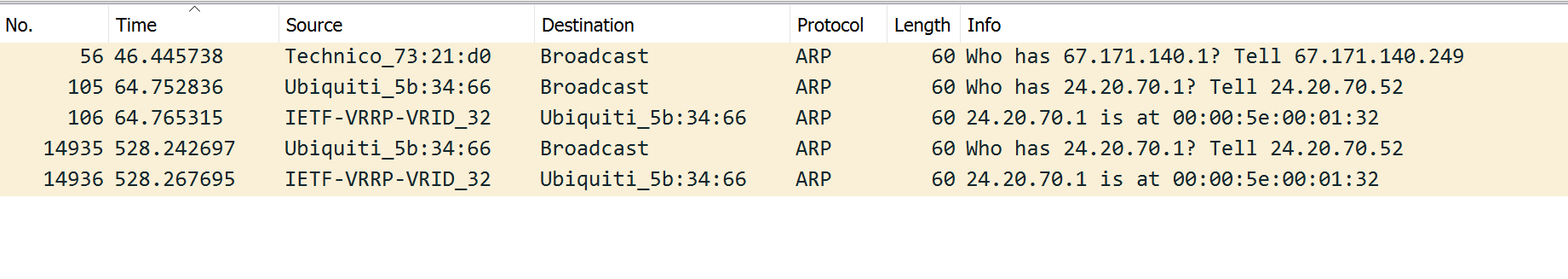

My first question was: when does the UDMP send the ARP requests for the default gateway? Filtering on just DHCP, I can see the UDMP getting an IP address at timestamp 64, and just after that the UDMP does an ARP request for the gateway 24.20.70.1. This looks just as you would expect. One thing that does stick out is the gateway’s MAC address: IETF-VRRP-VRID_32. That is an indication that the other side is using VRRP (Virtual Router Redundancy Protocol). Not surprising, but a hint at the unusual behavior.
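The IETF-VRRP-VRID name comes from a reserved MAC range: RFC 5798 assigns 00:00:5e:00:01:XX to IPv4 virtual routers (and 00:00:5e:00:02:XX to IPv6), where XX is the virtual router ID in hex. Here is a small sketch that decodes such an address. The function name is made up, and the example MAC assumes the trailing "32" shown by Wireshark is the final octet in hex, which is how Wireshark normally renders it but is not confirmed by the capture text above.

```shell
# Decode a VRRP virtual MAC address into its address family and VRID.
# Prints e.g. "ipv4 vrid=50"; returns non-zero for any other MAC.
vrrp_decode() {
    mac=$(printf '%s' "$1" | tr 'A-F' 'a-f')
    case "$mac" in
        00:00:5e:00:01:[0-9a-f][0-9a-f]) family=ipv4 ;;
        00:00:5e:00:02:[0-9a-f][0-9a-f]) family=ipv6 ;;
        *) echo "not a VRRP virtual MAC"; return 1 ;;
    esac
    vrid_hex=${mac##*:}                      # last octet, still in hex
    printf '%s vrid=%d\n' "$family" "0x$vrid_hex"
}
```

Under that assumption, `vrrp_decode 00:00:5e:00:01:32` reports an IPv4 virtual router with VRID 50 (0x32).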

With the ARP at 64 seconds, I started sending ICMP pings. Almost exactly 300 seconds later (timestamp 364s), the ICMPs started to fail. Some other traffic was still working, but this specific ICMP path was failing. Clearly something had timed out, and it seemed likely that my MAC address had aged out somewhere along the path from one of the VRRP member routers.

I SSHed into the UDMP and checked the ARP table, which had the entry for 24.20.70.1. I used the ‘arp -d’ command to remove that entry, which caused the UDMP to send a new ARP request for 24.20.70.1. I saw that request in the capture, and suddenly the ICMPs started working again!

I took a look at the statistics from this capture session, and you can see the outbound traffic going from the UDMP to the VRRP MAC address, but the return traffic is split between two Juniper routers (MACs ending in 0xef and 0x6c). These two return paths are not equal in usage, which is not a surprise, since whatever hashing picks the return router isn’t going to guarantee an even split across such a small set of connections.

I dug a bit more, and sure enough the replies to the ICMPs I was sending were all coming back from one of the two Junipers (the 0x6c one). It seems that most of the traffic from that particular Juniper gets dropped unless an ARP request happens (and presumably resets a MAC table somewhere along the way) every 5 mins.

Looking at the return-path traffic by source MAC confirms that the traffic from that second Juniper drops off after the suspected MAC timeout.
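The same per-router breakdown can be pulled out of a capture file without clicking through Wireshark. This is a rough sketch under a few assumptions: `tshark` is installed, the capture file name is a placeholder, and the helper name `count_by_mac` is invented for this example.

```shell
# Count packets per source MAC before and after a cutoff time.
# stdin: lines of "<seconds since capture start> <source MAC>"
# $1:    cutoff in seconds
count_by_mac() {
    awk -v cutoff="$1" '
        {
            if ($1 + 0 < cutoff + 0) before[$2]++
            else after[$2]++
            seen[$2] = 1
        }
        END {
            for (mac in seen)
                printf "%s before=%d after=%d\n", mac, before[mac], after[mac]
        }
    ' | sort
}
```

Fed with something like `tshark -r capture.pcapng -Y icmp -T fields -e frame.time_relative -e eth.src | count_by_mac 364`, a router whose return traffic stops arriving shows up as a MAC whose `after` count collapses relative to its `before` count.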

The FIX:

ARP timeouts are a bit complex. While there are OS-level settings (in this case you can see them under /proc/sys/net/ for the interface of interest), things like base_reachable_time_ms don’t provide everything you need. An entry in the ARP cache might not be refreshed via ARP if an upper-level protocol updates the status of that entry, which can happen when a packet is successfully received over a path that uses that entry. As a result, having those OS timers set to something like 30s might not actually result in a new ARP every 30s, especially on an active link. On the UDM I was able to see >20 min intervals between ARPs while traffic was flowing.
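To see what those OS-level timers are actually set to, the per-interface files can be read directly. A minimal sketch follows, assuming a Linux system that exposes them under /proc/sys/net/ipv4/neigh/; the function name is hypothetical, and the optional second argument exists only so the function can be pointed at a different directory tree.

```shell
# Print the kernel's ARP/neighbor timers for one interface.
# $1 = interface name
# $2 = sysctl root (defaults to /proc/sys)
neigh_timers() {
    dir="${2:-/proc/sys}/net/ipv4/neigh/$1"
    for name in base_reachable_time_ms gc_stale_time delay_first_probe_time; do
        if [ -r "$dir/$name" ]; then
            printf '%s=%s\n' "$name" "$(cat "$dir/$name")"
        fi
    done
}
```

On the UDM Pro this would be `neigh_timers eth9`, eth9 being the SFP+ WAN interface. As described above, these values alone do not tell you how often an ARP request really goes out on a busy link, so the capture remains the ground truth.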

Since I need to guarantee a new ARP every 5 mins, I created a cron job that runs every 4 mins and deletes the ARP entry for the gateway on the internet interface. In my case (the UDM Pro) the SFP+ WAN interface is eth9. I added the following command to the crontab:

sudo arp -d `arp -i eth9 | awk 'BEGIN { FS="[ ]" } ; NR==1 {print $1 }'`

That command will delete the ARP entry for the first entry in the WAN interface table, which in this case is the default route (because the IP is .1). This is a serious hack, and not something you would want to rely on long term.
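A slightly less brittle variant could look the gateway up from the routing table instead of relying on table order. This is only a sketch: it assumes the iproute2 `ip` tool is available on the device and that the WAN default route is visible in the main routing table, neither of which is confirmed above, and both function names are made up for illustration.

```shell
# Print the next hop from "ip route" output on stdin (the word after "via").
gateway_from_route() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "via") { print $(i + 1); exit } }'
}

# Delete the gateway's neighbor entry so the next outbound packet
# triggers a fresh ARP request.
# $1 = WAN interface (eth9 on the UDM Pro described here)
refresh_gateway_arp() {
    gw=$(ip -4 route show default dev "$1" | gateway_from_route)
    if [ -z "$gw" ]; then
        echo "no default gateway found on $1" >&2
        return 1
    fi
    ip neigh del "$gw" dev "$1"
}
```

Saved as a script that ends with `refresh_gateway_arp eth9`, it could be scheduled with a crontab entry using `*/4 * * * *` to match the 4 minute interval. It is still a workaround rather than a real fix.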

It does work, and a week later everything is working great with the ARP refresh. It is a surprising issue, perhaps masked by the fact that not many people run the modem in bridge mode, and because Windows tends to ARP much more often. It is possible that other firewalls have lower ARP thresholds that mitigate this problem. There isn’t a magic ARP timeout that is ‘correct’, and the actual implementations vary a lot.

I talked about this issue at length with my friend Eric Rosenberry, who happens to be Director of Network Architecture at Ziply. He suggested that it could be some layer 2 overlay like EVPN sitting between the VRRP router and my device. Fortunately he had a few contacts who know a few other contacts who may be able to pass this interesting problem along to some of the client network engineers at Comcast. I’ll update if I hear back.

I did find after a bit of searching that other people have seen this issue with the UDM Pro and Comcast: